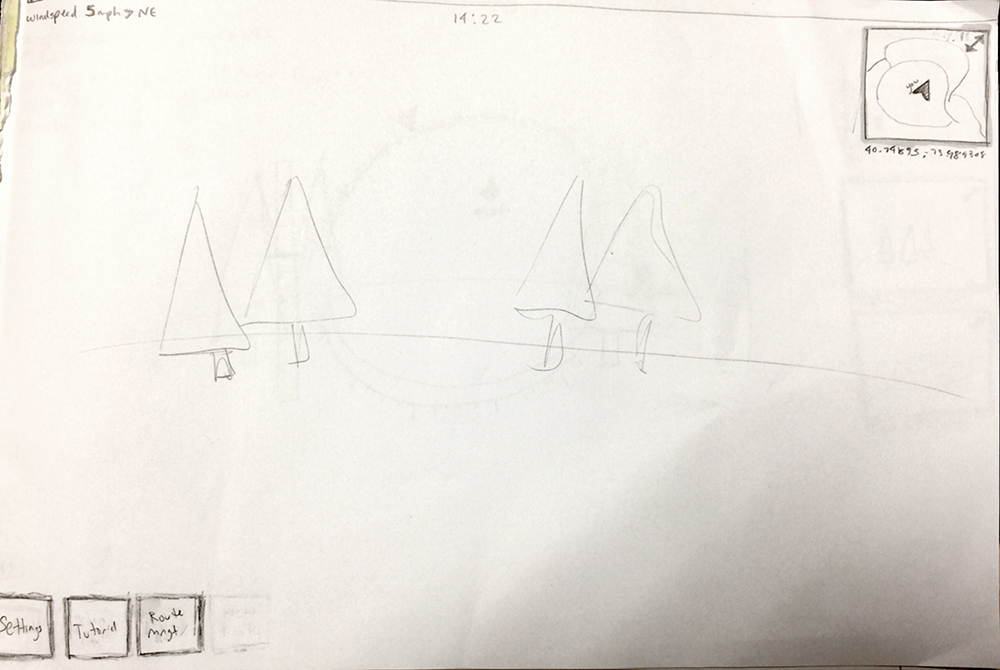

The Problem

Drones have become an integral part of a typical military tactical team’s equipment – helping with surveillance, image capture, and mission success. However, the pilot’s lack of situational awareness is alarming and can even be deadly. This is because flying a drone requires the pilot to be heads-down, navigating hands-on with a clunky controller and monitor.